ReStyle-MusicVAE: Enhancing User Control of Deep Generative Music Models

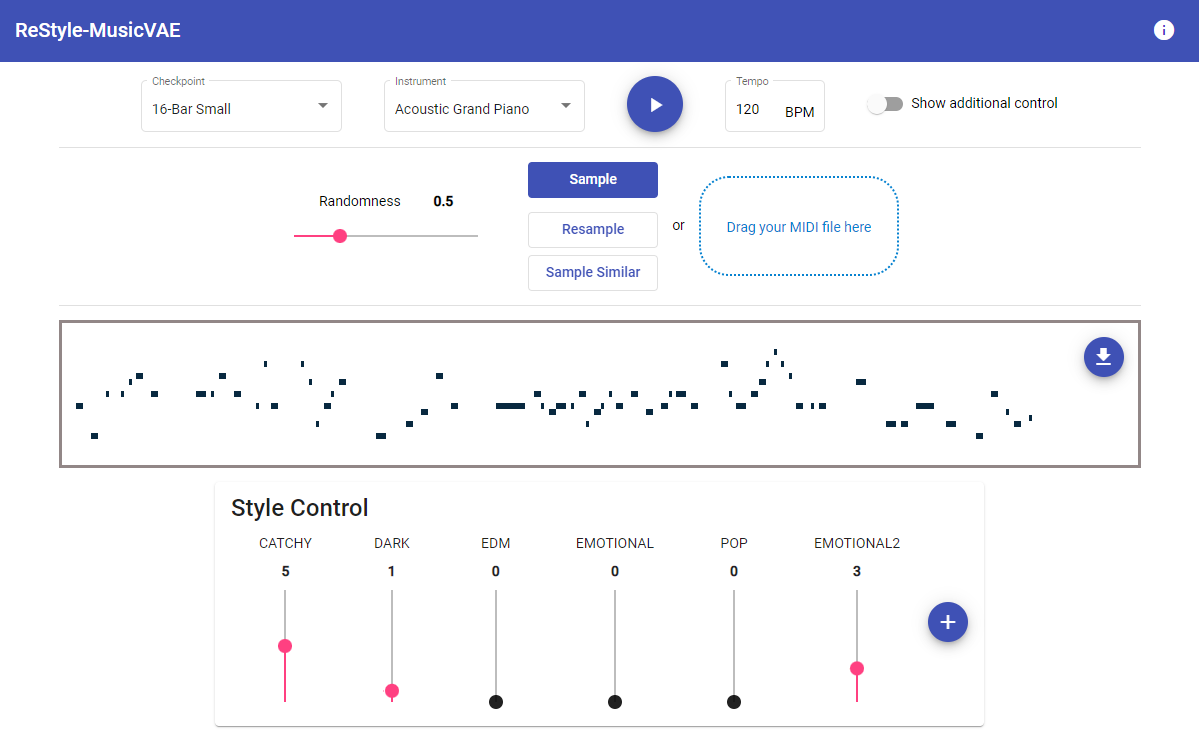

ReStyle-MusicVAE is an AI-powered melody composition tool for real-time human-AI co-creation. Additional style control sliders allow for interactive style manipulation.

In this article, I’m excited to introduce ReStyle-MusicVAE (https://restyle-musicvae.web.app), an AI-powered melody composition tool for real-time human-AI co-creation.

What is it about?

Deep generative models have emerged as one of the most actively researched topics in artificial intelligence. One area drawing increasing attention is the automatic generation of music, with applications that include systems that support and inspire the process of music composition. For these assistive systems to be successful and accepted by users, it is imperative that they give users agency and let them express their personal style in the process of composition.

ReStyle-MusicVAE (https://restyle-musicvae.web.app) is an interactive melody composition tool for human-AI co-creation with style control mechanisms. It builds on the automatic melody generation and variation approach of MusicVAE and adds semantic control dimensions that steer the generation process toward stylistic anchors. The result is a lightweight manipulation mechanism that gives the user a higher degree of control.
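To make the idea of steering toward a stylistic anchor more concrete, here is a minimal sketch of one way a style slider could act on a latent vector. It is an illustration under assumptions, not the ReStyle-MusicVAE implementation: the function names `style_anchor` and `steer_toward_anchor`, the use of a mean latent vector as the anchor, and the linear blend are all hypothetical choices made for this example.

```python
from typing import List, Sequence


def style_anchor(latents: Sequence[Sequence[float]]) -> List[float]:
    """Hypothetical anchor: the mean latent vector of example melodies in one style."""
    if not latents:
        raise ValueError("need at least one latent vector")
    dim = len(latents[0])
    if any(len(v) != dim for v in latents):
        raise ValueError("all latent vectors must have the same length")
    return [sum(v[i] for v in latents) / len(latents) for i in range(dim)]


def steer_toward_anchor(
    z: Sequence[float], anchor: Sequence[float], strength: float
) -> List[float]:
    """Blend latent vector z toward anchor (assumed linear interpolation).

    strength plays the role of a slider value:
    0.0 leaves z unchanged, 1.0 returns the anchor itself.
    """
    if len(z) != len(anchor):
        raise ValueError("z and anchor must have the same length")
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    return [(1.0 - strength) * zi + strength * ai for zi, ai in zip(z, anchor)]


# Toy 2-dimensional example; a real latent space has far more dimensions.
anchor = style_anchor([[1.0, 0.0], [3.0, 2.0]])
steered = steer_toward_anchor([0.0, 0.0], anchor, strength=0.5)
```

In a full system, the steered vector would then be passed to the model's decoder to produce a melody. Because only the latent vector changes, no retraining is involved, which is what keeps a mechanism of this kind lightweight.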

Why is it important?

Human-AI interaction with these generative music models is still tedious because the systems usually require retraining, which is very expensive, time-consuming, and dependent on a large volume of quality data, and they are still not user-friendly for non-technical users. To address these user control difficulties, ReStyle-MusicVAE introduces a lightweight style control mechanism and an interactive, easy-to-use user interface for steerable music generation.

Additional Resources

- If you want to learn more about this project, please see our research paper.

- The interactive web-app is available here.

- The code for the ReStyle-MusicVAE web-app is available here.

Acknowledgements

I would especially like to thank Peter Knees and Richard Vogl for all of their contributions, effort, and advice.